When it comes to on-page optimization, you should understand that it has its ins and outs. Any web store is expected to have a complex structure and a huge number of pages. Let’s take a closer look at the details that deserve careful attention.

Read also: Key SEO Tips for Any Ecommerce Business.

Technical configuration for a web store

Robots.txt

The file tells search engines which pages of the website they may crawl and index. Indexed pages can appear in search results, while pages that are not indexed won’t. Keep in mind, however, that robots.txt is only an instruction: pages closed to indexing can still be found by search crawlers. That’s why pages containing private data should be protected with a strong password.

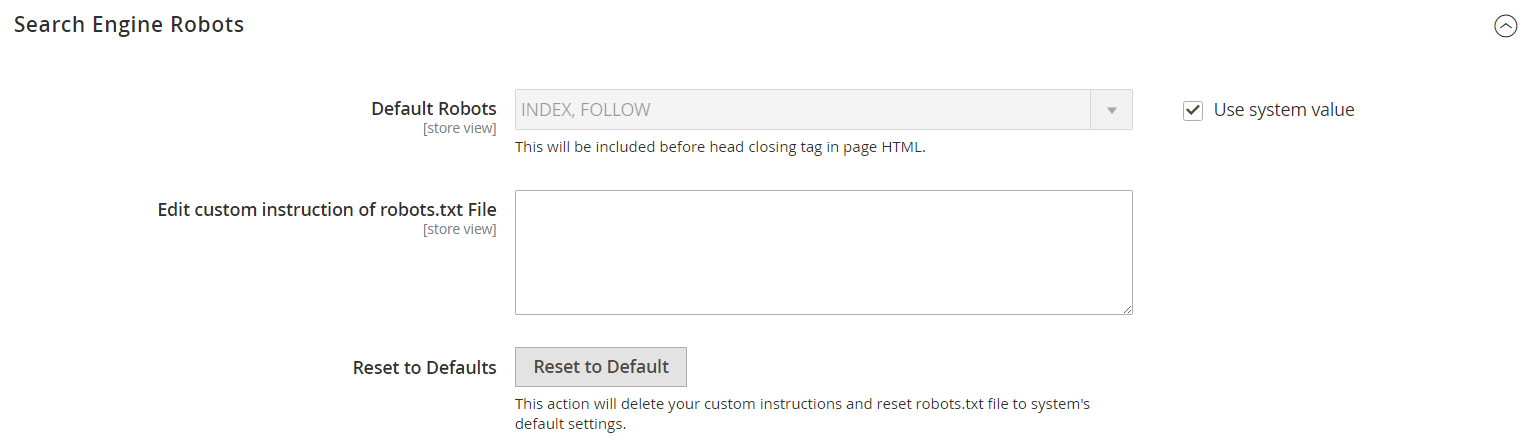

If your website is still under development, its robots settings should be NOINDEX, NOFOLLOW. After the website goes live, don’t forget to switch them to INDEX, FOLLOW. In Magento 2.2.x, this setting is shown in the screenshot above.
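After launch, it’s worth confirming that live pages no longer carry the development-time values. Here is a minimal Python sketch, assuming the setting ends up in a `<meta name="robots">` tag in each page’s HTML. It collects the directives with the standard `html.parser` module and reports whether the page still blocks indexing or link following. The `RobotsMetaParser` class and `blocks_indexing` function are hypothetical helpers, not part of Magento.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots" content="..."> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; attribute values may be None.
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def blocks_indexing(html: str) -> bool:
    """True if the page still asks crawlers not to index it or not to follow its links."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return any(d in {"noindex", "nofollow", "none"} for d in parser.directives)


# A page that still carries the development-time setting.
print(blocks_indexing('<head><meta name="robots" content="NOINDEX,NOFOLLOW"></head>'))  # True
```

To check a live page, download its HTML (for example with `urllib.request.urlopen`) and pass the decoded text to `blocks_indexing`.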

In the robots.txt file, you specify which search engines (user agents) are allowed to index the website. To make your website available for indexing by all search engines (which I recommend), use the following line:

```
User-agent: *
```

Next, define which pages should be closed to indexing, using the Disallow directive. For web stores, these fall into the following categories:

- admin pages

```
Disallow: /admin/
```

- Magento’s general technical directories

```
Disallow: /app/
Disallow: /downloader/
Disallow: /errors/
Disallow: /includes/
Disallow: /lib/
Disallow: /pkginfo/
Disallow: /shell/
Disallow: /var/
```

- Magento’s shared files

```
Disallow: /api.php
Disallow: /cron.php
Disallow: /cron.sh
Disallow: /error_log
Disallow: /get.php
Disallow: /install.php
Disallow: /LICENSE.html
Disallow: /LICENSE.txt
Disallow: /LICENSE_AFL.txt
Disallow: /README.txt
Disallow: /RELEASE_NOTES.txt
```

- category pages that are sorted or filtered

```
Disallow: /*?dir*
Disallow: /*?dir=desc
Disallow: /*?dir=asc
Disallow: /*?limit=all
Disallow: /*?mode*
```

- URLs that contain a session ID

```
Disallow: /*?SID=
```

- checkout and customer account pages

```
Disallow: /checkout/
Disallow: /onestepcheckout/
Disallow: /customer/
Disallow: /customer/account/
Disallow: /customer/account/login/
```

- general technical directories and files on the server

```
Disallow: /cgi-bin/
Disallow: /cleanup.php
Disallow: /apc.php
Disallow: /memcache.php
Disallow: /phpinfo.php
```
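Several of the rules above use `*` as a wildcard, and robots.txt paths are case-sensitive, so `/*?Dir=desc` would not block `?dir=desc`. To check which URLs such rules actually block, here is a minimal Python sketch of the matching behaviour described in RFC 9309 (the Robots Exclusion Protocol) and used by Google: `*` matches any run of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins, and Allow wins a tie. The function names and sample URLs are hypothetical; this is an illustration, not Google’s own parser.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regular expression.

    '*' matches any run of characters and a trailing '$' anchors the end of the URL;
    everything else is literal. Patterns match from the start of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide whether `path` (URL path plus query string) may be crawled.

    `rules` holds ("allow" | "disallow", pattern) pairs for one user agent.
    The longest matching pattern wins, Allow wins a tie, and no match means allowed.
    """
    best_length = -1
    allowed = True
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty Disallow line blocks nothing
        if pattern_to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_length or (length == best_length and kind == "allow"):
                best_length = length
                allowed = kind == "allow"
    return allowed


# A few of the store rules above, written as (kind, pattern) pairs.
STORE_RULES = [
    ("disallow", "/*?dir*"),
    ("disallow", "/*?limit=all"),
    ("disallow", "/*?mode*"),
    ("disallow", "/*?SID="),
    ("disallow", "/checkout/"),
    ("disallow", "/customer/"),
]

for path in ("/women/dresses.html", "/women/dresses.html?dir=desc",
             "/?SID=abc123", "/checkout/cart/"):
    print(path, is_allowed(STORE_RULES, path))
```

Testing a list of real URLs from your store this way before publishing the file helps catch rules that block too much or too little.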

Product pages are the most significant pages of a store, so it’s important to keep them available for indexing. At the same time, avoid duplicate pages and keep duplicates out of the index.

Also, Google should have access to the script files in the /js/ directory, so make it available too.

The robots.txt file itself should be placed in the website’s root directory (as a rule, the same location as the homepage), so that it is available at site.com/robots.txt.
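Python’s standard `urllib.robotparser` module can check which URLs a robots.txt file blocks. It only does plain prefix matching (no `*` or `$` wildcards) and applies the first rule that matches rather than the longest one, so this sketch uses a trimmed-down, wildcard-free subset of the rules above. The domain `www.example.com` and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Plain-prefix subset of the rules above (urllib.robotparser doesn't support wildcards).
# It applies the first rule that matches, so the Allow line is listed first.
ROBOTS_TXT = """\
User-agent: *
Allow: /js/
Disallow: /admin/
Disallow: /app/
Disallow: /checkout/
Disallow: /customer/
Disallow: /var/
"""


def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = make_parser(ROBOTS_TXT)
for url in (
    "https://www.example.com/women/red-dress.html",     # product page: should stay crawlable
    "https://www.example.com/js/varien/form.js",        # scripts Google needs to render pages
    "https://www.example.com/checkout/cart/",           # checkout: blocked
    "https://www.example.com/customer/account/login/",  # customer account: blocked
):
    print(url, parser.can_fetch("Googlebot", url))
```

For a live site, `RobotFileParser("https://www.example.com/robots.txt")` followed by `.read()` downloads and parses the file from the root directory instead.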

.htaccess

.htaccess (hypertext access) is an Apache web server configuration file that lets you manage server settings for your site from one place. With it, you can control caching and configure redirects for your store.

The file is of great importance for SEO, so make proper use of it for the following tasks:

- Merge the “www” and non-“www” versions of the site. Otherwise, search engines will treat them as two different websites that compete with each other, so neither ranks well. It also creates a lot of duplicate content, which hurts rankings and, as a result, brings you less traffic.

- Redirect index to root. A common mistake is a “Home” button that leads to site.com/index.php or site.com/home.html. This creates unnecessary duplicates of the main page, so variants such as /home and /index should be redirected to the root URL.

- Set up 301 redirects from pages that return a 404 error. These pages may once have contained useful information, and resources you can’t change (other websites, whitepapers, documents downloaded by users) may still link to them. Redirect each of them to the most relevant live page.

- Enable file compression and caching to increase page speed.

- Rewrite dynamic URLs into simple ones. URLs should be SEO-friendly, like website.com/clothes/dresses, so that the URL alone tells you what the page is about. Configure a clean URL generator that produces URLs without extra parameters: www.site.com/nice-looking-hat.php instead of http://www.site.com/products.php?pn=9021.
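In .htaccess these rules are written as Apache rewrite directives. The Python sketch below only models the decisions those redirect and clean-URL rules make, so you can test a list of URLs against them before touching the server. The canonical host, the index variants and the moved-page map are hypothetical values; replace them with your own domain and URL history.

```python
import re
import unicodedata
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical values: replace with your own domain and URL history.
CANONICAL_HOST = "www.example.com"
INDEX_PATHS = {"/index.php", "/index.html", "/home", "/home.html"}
MOVED_PAGES = {"/old-summer-sale.html": "/women/dresses.html"}  # dead page -> most relevant live page


def redirect_target(url: str) -> Optional[str]:
    """Return where a 301 redirect should point, or None if `url` is already canonical."""
    parts = urlsplit(url)
    path = parts.path or "/"
    # Moved pages go to their replacement; index variants collapse to the root URL.
    target_path = MOVED_PAGES.get(path, "/" if path in INDEX_PATHS else path)
    if parts.netloc.lower() == CANONICAL_HOST and target_path == path:
        return None
    return urlunsplit((parts.scheme, CANONICAL_HOST, target_path, parts.query, ""))


def slugify(name: str) -> str:
    """Turn a product name into a clean, lowercase, hyphen-separated URL segment."""
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_name.lower()).strip("-")


for url in ("https://example.com/women/dresses.html",
            "https://www.example.com/index.php",
            "https://www.example.com/old-summer-sale.html",
            "https://www.example.com/women/dresses.html"):
    print(url, "->", redirect_target(url))
print(slugify("Nice-Looking Hat!"))  # nice-looking-hat
```

Keeping the host check, the index check and the moved-page lookup in one function means each URL is redirected once, straight to its final address, instead of through a chain of redirects.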

Semantic markup

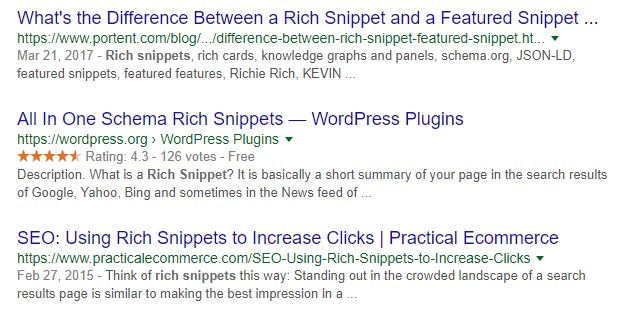

Semantic markup is a machine-readable vocabulary that tells search engines what the information on a page means and how it can be displayed. With it, you can also improve how the website looks in search results by creating so-called rich snippets. This can increase traffic even if the site isn’t ranked at the top.

Let’s compare some Google results. The result that stands out most (in this case, thanks to its rating stars) can get more traffic even though it ranks lower.

The easiest and most efficient way to make the website stand out in search results is structured data based on the schema.org vocabulary, which Google supports. For web stores, the most significant types are the product schema and the review schema (Product and Review in Google’s terms).
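As an illustration, here is a minimal Python sketch that renders schema.org Product markup (with an Offer and an AggregateRating, the part that produces the rating stars) as a JSON-LD `<script>` block for a product page. The function name and the sample product are hypothetical, and the property set is deliberately small; check Google’s Product structured data documentation for the fields it currently requires.

```python
import json


def product_json_ld(name: str, sku: str, url: str, image: str, price: float,
                    currency: str, in_stock: bool, rating: float, review_count: int) -> str:
    """Build a schema.org Product snippet (with offer and aggregate rating) as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "url": url,
        "image": image,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock" if in_stock
                            else "https://schema.org/OutOfStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so product text can never close the <script> element early.
    payload = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(product_json_ld(
    name="Linen Summer Dress", sku="LSD-001",
    url="https://www.example.com/women/linen-summer-dress.html",
    image="https://www.example.com/media/linen-summer-dress.jpg",
    price=39.9, currency="USD", in_stock=True, rating=4.6, review_count=27,
))
```

Generating the markup from the same product data that renders the page keeps the price, stock status and rating in the snippet consistent with what visitors actually see.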

You can check your website’s structured data with Google’s structured data testing tool.

If you put semantic markup to good use, your website will be presented in the best way not only in search results but also on other websites. For example, Twitter Cards make tweeting easier: a shared link is shown as a rich preview (title, description, image) that doesn’t use up the tweet’s character limit, which is now 280 characters.
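Twitter Cards are plain `<meta>` tags in the page’s `<head>`. A minimal Python sketch that renders them for a product page, escaping the values so quotes or ampersands in product names don’t break the HTML; the function name, sample values and `@examplestore` handle are hypothetical.

```python
from html import escape


def twitter_card_tags(title: str, description: str, image_url: str,
                      site_handle: str = "") -> str:
    """Render Twitter Card <meta> tags for a product page's <head>."""
    tags = {
        "twitter:card": "summary_large_image",  # preview with a large image above the link
        "twitter:title": title,
        "twitter:description": description,
        "twitter:image": image_url,
    }
    if site_handle:
        tags["twitter:site"] = site_handle  # the store's @username
    return "\n".join(
        f'<meta name="{name}" content="{escape(value, quote=True)}">'
        for name, value in tags.items()
    )


print(twitter_card_tags('Linen "Summer" Dress', "Light linen dress & belt",
                        "https://www.example.com/media/linen-summer-dress.jpg",
                        "@examplestore"))
```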

Header tags

When designing a webpage, it’s hard to do without headers, especially when the page has a lot of content. Headers H1–H6 used to play a major role in SEO, but that’s no longer the case. When using headers, the only thing you need to focus on is the overall logic of the page.

Headers should follow their hierarchy in order of priority, with no level skipped. H1 is the page header, while the remaining headers are subheaders. If the page contains two headers, make them H1 and H2, not H1 and H3.
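The no-skipping rule is easy to check automatically. Here is a minimal sketch using Python’s standard `html.parser`. The `HeadingCollector` class and `heading_problems` helper are hypothetical, and they only look at tag levels, not at whether the header text makes sense.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level (1-6) of every <h1>...<h6> tag, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """List hierarchy problems: no leading H1, or a level skipped on the way down."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    problems = []
    if not levels or levels[0] != 1:
        problems.append("page should start with an H1")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"H{prev} is followed by H{cur}; H{prev + 1} is skipped")
    return problems


print(heading_problems("<h1>Dresses</h1><h3>Summer dresses</h3>"))
```

Going back up the hierarchy (an H3 followed by an H2) is not reported, because starting a new section that way is normal.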

To change text size, use CSS styles rather than headers.

Moreover, each header should express the main idea of the text block it introduces and help users navigate the page.

Hidden content

If some items are out of stock and you don’t know when they will be available again, it’s better to hide them.
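The rule can be expressed as a simple catalog filter. A minimal Python sketch, assuming a hypothetical `Product` record with a stock flag and an optional restock date; in a real store this logic lives in the platform’s catalog and stock settings, not in this code.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Product:
    name: str
    in_stock: bool
    restock_date: Optional[date] = None  # None means "we don't know when it's back"


def visible_products(products: list[Product]) -> list[Product]:
    """Keep items that are in stock or have a known restock date; hide the rest."""
    return [p for p in products if p.in_stock or p.restock_date is not None]


catalog = [
    Product("Linen Summer Dress", in_stock=True),
    Product("Straw Hat", in_stock=False, restock_date=date(2024, 6, 1)),
    Product("Wool Scarf", in_stock=False),
]
print([p.name for p in visible_products(catalog)])  # ['Linen Summer Dress', 'Straw Hat']
```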

Site performance

Site performance means how fast the site loads and is displayed in the user’s browser. The better the performance, the better the impression the site makes on the user. Google also penalizes slow sites in its rankings.

Load speed depends on several factors:

- browser/server cache;

- image optimization;

- encryption (SSL).

See also: How to Reduce PNG Image Size.

To improve site performance, use the following methods:

- multi-layered cache;

- a simpler design;

- asynchronous communication with server-side components.
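The idea behind a multi-layered cache is to check the fastest store first and fall back to slower ones, doing the expensive work (such as rendering a page from the database) only when every layer misses. A minimal Python sketch, where plain dicts stand in for real cache backends such as a per-process cache and a shared cache server; the `LayeredCache` class and `render_page` function are hypothetical.

```python
from typing import Callable


class LayeredCache:
    """Looks a key up in several cache layers, fastest first, before doing the slow work.

    When a slower layer (or the loader) supplies the value, it is copied into every
    faster layer that missed, so the next request for the same key is cheaper.
    """

    def __init__(self, layers: list[dict], loader: Callable[[str], str]) -> None:
        self.layers = layers  # e.g. [per-process cache, shared cache]; plain dicts here
        self.loader = loader  # the slowest source, e.g. rendering a page from the database

    def get(self, key: str) -> str:
        for depth, layer in enumerate(self.layers):
            if key in layer:
                value = layer[key]
                break
        else:  # missed every layer
            depth = len(self.layers)
            value = self.loader(key)
        for layer in self.layers[:depth]:
            layer[key] = value
        return value


renders = []


def render_page(url: str) -> str:
    renders.append(url)  # count how often the expensive step really runs
    return f"<html>{url}</html>"


local, shared = {}, {}
cache = LayeredCache([local, shared], render_page)
cache.get("/women/dresses.html")  # miss everywhere: rendered and stored in both layers
local.clear()                     # e.g. a fresh worker process with an empty local cache
cache.get("/women/dresses.html")  # served from the shared layer, copied back into local
print(len(renders))               # 1
```

A real setup also needs expiry and invalidation (for example, when a price changes), which this sketch leaves out.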

See also: What Methods of Speed Optimization Do I Know.

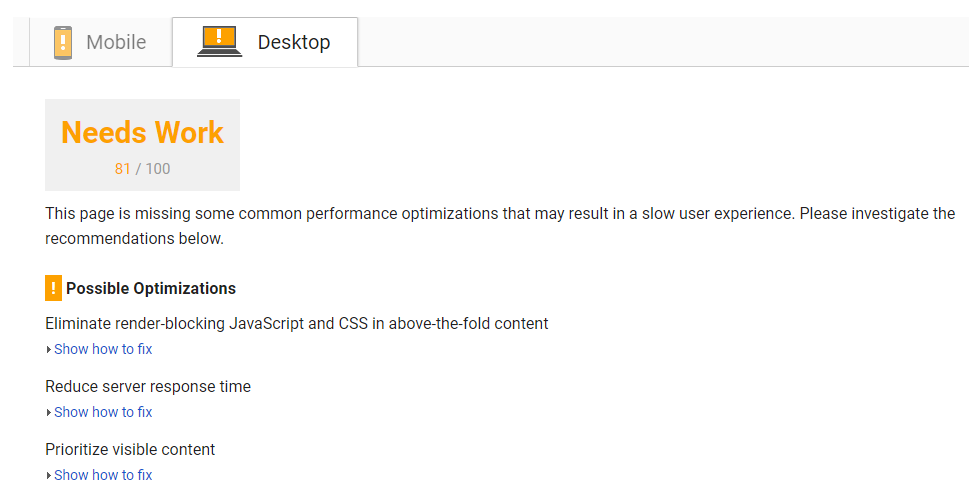

You can also check your website’s speed with PageSpeed Insights, a free Google tool. It tests both the desktop and mobile versions and suggests how to improve them.
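PageSpeed Insights can also be queried through its API, which is handy for checking key store pages regularly. Here is a minimal Python sketch, assuming the v5 `runPagespeed` endpoint with its `url` and `strategy` parameters and the `lighthouseResult.categories.performance.score` field of the JSON response; verify these against the current API reference. The helper names are hypothetical, and only `fetch_report` touches the network.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights API request for the mobile or desktop version of a page."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"


def performance_score(report: dict) -> int:
    """Read the Lighthouse performance score (0 to 1 in the JSON) as a 0-100 number."""
    return round(report["lighthouseResult"]["categories"]["performance"]["score"] * 100)


def fetch_report(page_url: str, strategy: str = "mobile") -> dict:
    """Call the API over the network; frequent use requires an API key."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as response:
        return json.load(response)
```

For example, `performance_score(fetch_report("https://www.example.com/", "desktop"))` returns the desktop score for a hypothetical store homepage; running it for both strategies mirrors what the web tool shows.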

This article covers the general principles of on-page optimization that suit any web store, regardless of the platform it runs on (Magento, PrestaShop, WordPress, etc.). The next article will explain on-page optimization for Magento web stores in detail. Follow our blog and stay tuned!